GPU Cluster - Interconnect HPC Systems with GPUs to Form a Cluster

Facing compute-intensive workloads in AI, deep learning, or scientific simulations? A GPU cluster is the ideal solution when a single system reaches its limits. More than just a basic server network, a GPU cluster is a purpose-built setup designed to deliver maximum performance and scalability for graphical and parallel processing tasks. Discover the full versatility of our GPU cluster solutions here.

What is a GPU Cluster?

A GPU cluster consists of GPU computing systems that are loosely coupled and provisioned for specific tasks. For smaller workloads or as an entry point into GPU computing, GPU workstations can alternatively be used as standalone computing systems. As with a conventional cluster, a GPU cluster is composed of five different components:

- Master server: Access to the GPU cluster is controlled via this server. In professional cloud environments, OpenStack often takes on this task to automatically manage and allocate resources.

- GPU computing systems: Together, these systems form the individual cluster nodes.

- Common data area: All cluster members connected in the GPU cluster have access to this common data area.

- Cluster network: The cluster network handles the internal communication between the cluster members.

- Operating system: Each cluster member is managed by its own operating system.

When are GPU clusters used?

GPU clusters are an effective solution when a clearly defined task can be broken down into multiple subtasks that are processed in parallel on GPU computing systems, for example in a liquid-cooled data center. Typical applications include:

- Artificial Intelligence (AI) & Deep Learning: Training complex neural networks requires massive parallel processing power, which can be ideally orchestrated via Kubernetes.

- Scientific Computing & Simulations: For advanced simulations in physics, chemistry, or meteorology.

- High-End Rendering: Used in the film and animation industry to power render farms.

- Big Data Analytics: When large volumes of data need to be processed in minimal time.

Furthermore, a GPU cluster can be used to give several employees access to a specific application in order to complete a task simultaneously and as efficiently as possible.
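The idea of breaking one task into subtasks that run in parallel can be illustrated with a minimal Python sketch. It is not a GPU implementation: the function names are hypothetical, local threads stand in for cluster nodes, and a sum of squares stands in for the real workload.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_subtasks(items, num_nodes):
    """Split a list of work items into num_nodes nearly equal chunks."""
    chunk_size, remainder = divmod(len(items), num_nodes)
    chunks, start = [], 0
    for node in range(num_nodes):
        # The first `remainder` nodes each take one extra item.
        end = start + chunk_size + (1 if node < remainder else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def process_subtask(chunk):
    """Placeholder for the work one node would do (here: sum of squares)."""
    return sum(x * x for x in chunk)


def run_on_cluster(items, num_nodes=4):
    """Process all chunks in parallel and combine the partial results."""
    chunks = split_into_subtasks(items, num_nodes)
    # Threads are an assumption standing in for real cluster nodes.
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        partial_results = list(pool.map(process_subtask, chunks))
    return sum(partial_results)


if __name__ == "__main__":
    print(run_on_cluster(list(range(1_000)), num_nodes=4))
```

On a real cluster, a scheduler or a framework such as Kubernetes would take over the distribution step, but the pattern of split, process, and combine stays the same.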

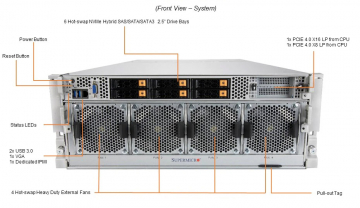

GPU Cluster - Configuration Example with NVIDIA GPUs: For each type of network connection, we have prepared three different GPU cluster variants.

| GPU Cluster with TCP/IP network connection | | | |
|---|---|---|---|
| GPU CUDA Cores | 32 x 5120 | 16 x 5120 | 8 x 5120 |
| NVIDIA NVLink | 300 GB/s | 300 GB/s | 300 GB/s |
| GPU Tensor Cores | 32 x 640 | 16 x 640 | 8 x 640 |
| CPU Processors | 16 | 8 | 4 |
| Rack height | 8 | 4 | 2 |
| GPU memory total | 1024 GB | 512 GB | 256 GB |
| Storage CPU total | 12 TB | 12 TB | 12 TB |
| Data Storage | 16 x M.2 NVMe 16 x 1920 GB | 8 x M.2 NVMe 8 x 1920 GB | 4 x M.2 NVMe 4 x 1920 GB |
| Network | 2 x 10GBase-T | 2 x 10GBase-T | 2 x 10GBase-T |

| GPU Cluster with EDR/FDR InfiniBand network connection | | | |
|---|---|---|---|
| InfiniBand EDR 100 Gbit/s | 8 x 100 Gbit/s | 4 x 100 Gbit/s | 2 x 100 Gbit/s |
| InfiniBand FDR 56 Gbit/s | 8 x 56 Gbit/s | 4 x 56 Gbit/s | 2 x 56 Gbit/s |
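The totals behind the configuration example can be worked out with a short Python sketch. The per-GPU core counts are taken from the table. The 32 GB of memory per GPU is an assumption derived from 1024 GB across 32 GPUs, and the function name is hypothetical.

```python
# Per-GPU figures from the configuration table.
CUDA_CORES_PER_GPU = 5120
TENSOR_CORES_PER_GPU = 640
# Assumption: 1024 GB total / 32 GPUs = 32 GB per GPU.
GPU_MEMORY_GB_PER_GPU = 32


def cluster_totals(num_gpus):
    """Return aggregate core counts and GPU memory for a cluster variant."""
    return {
        "cuda_cores": num_gpus * CUDA_CORES_PER_GPU,
        "tensor_cores": num_gpus * TENSOR_CORES_PER_GPU,
        "gpu_memory_gb": num_gpus * GPU_MEMORY_GB_PER_GPU,
    }


if __name__ == "__main__":
    for num_gpus in (32, 16, 8):
        print(num_gpus, cluster_totals(num_gpus))
```

Under this assumption, the 8-GPU variant works out to 40,960 CUDA cores, 5,120 Tensor Cores, and 256 GB of GPU memory.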

GPU Cluster Prices - Cluster System Costs at HAPPYWARE

The costs for a full GPU cluster are difficult to estimate in advance, because the prices for these GPU systems depend on project-specific pricing and on the number of cluster nodes.

If you want to buy a custom-built GPU cluster, please do not hesitate to contact us. You will receive a fully pre-configured GPU cluster based on your preferred Linux distribution, implemented at a reasonable price with GPU server systems from Supermicro, ASUS, or GIGABYTE.

Information for educational and research institutes: For GPU clusters that are to be used in public institutions or for research or education purposes, we can offer special terms. If you are in the process of planning a GPU cluster project, please contact us.

From planning to implementation, our expert Jürgen Kabelitz knows what is required throughout the process. He will be able to answer all of your questions about your project.

Buy or rent GPU clusters - HAPPYWARE offers these options

- Individual configuration: We design and offer GPU clusters that are optimized for your applications.

- Vendor-independent consulting: Our advice is completely vendor-independent, allowing us to recommend the most technically and economically suitable solution for your specific needs.

- Personal consultation from experienced experts: Benefit from our many years of expertise. Our seasoned specialists provide you with personal, knowledgeable support, from planning to implementation.

- Assistance with your decision: Together with you, we determine whether buying or renting a GPU cluster is more beneficial for your company. From experience, we know that a purchase is recommended for a planned operating time of more than 12 months.

- Systems with full scalability: If you buy a GPU cluster system from us, you can flexibly expand it later with additional cluster nodes. In this way, the cluster grows with your requirements.

- Financing options: We are able to offer different financing solutions for our GPU cluster systems. Please contact us for leasing or hire-purchase options.
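The buy-or-rent decision can be sketched as a simple break-even calculation in Python. All figures below are hypothetical and are not HAPPYWARE prices. The function name and the cost model (one-time purchase price plus monthly operating cost versus a flat monthly rent) are assumptions made for illustration.

```python
def break_even_months(purchase_price, monthly_operating_cost, monthly_rent):
    """Return the first whole month in which buying is cheaper than renting.

    Buying costs purchase_price + months * monthly_operating_cost,
    renting costs months * monthly_rent. Returns None if renting
    never becomes more expensive than buying.
    """
    monthly_saving = monthly_rent - monthly_operating_cost
    if monthly_saving <= 0:
        return None
    # Buying wins once months > purchase_price / monthly_saving.
    return int(purchase_price // monthly_saving) + 1


if __name__ == "__main__":
    # Hypothetical example figures, chosen only for illustration.
    print(break_even_months(120_000, 1_000, 11_000))
```

With these example figures, the purchase pays off from month 13 onward, which matches a rule of thumb of buying for operating times of more than 12 months. Real projects would also need to account for financing, depreciation, and resale value.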

If you need more information about our GPU clusters, please contact our HPC expert, who will be happy to answer your questions.