NVIDIA Servers: Your Gateway to Superior Compute Power for AI, HPC, and Rendering

Where traditional CPU-based servers hit performance limits, NVIDIA GPU servers deliver a substantial performance boost through massively parallel processing. These specialized systems are engineered to greatly accelerate complex workloads in artificial intelligence (AI), high-performance computing (HPC), and professional visualization.

The result: a dramatic shortening of innovation cycles—computations that once took days now complete in hours, granting you a decisive competitive edge.

At HAPPYWARE, we understand that this level of performance cannot come in a one-size-fits-all package. That’s why for over 25 years, we’ve been configuring GPU systems precisely tailored to your specific workload and use case.

NVIDIA DGX Server

NVIDIA DGX Systems: DGX Spark – complete systems for AI, HPC, and Deep Learning.

Purchase or lease directly from the official NVIDIA partner HAPPYWARE!

NVIDIA HGX Server

NVIDIA HGX: Your modular foundation for high-performance GPU servers.

Optimized for Deep Learning, HPC, and professional AI workloads – with expert guidance and support from HAPPYWARE.

Here you'll find NVIDIA Servers

Do you need help?

Simply call us or use our inquiry form.

What is an NVIDIA Server and what is its key advantage?

An NVIDIA GPU Server is far more than just a server equipped with a powerful graphics card. Its real strength lies in the massively parallel architecture of the graphics processing unit (GPU), purpose-built to accelerate compute-intensive workloads such as AI training, HPC simulations, and professional rendering. This hardware power is complemented by a mature software ecosystem built around NVIDIA CUDA®, which unlocks the full potential of the GPU for demanding applications.

However, to fully leverage this performance and realize the maximum return on investment, you need a well-balanced overall system. This is where the key advantage of a HAPPYWARE-configured NVIDIA Server comes into play: instead of a standard off-the-shelf solution, you receive a system in which the interplay of CPU, memory, storage, and GPU is carefully optimized and precisely matched to your use case.

Our expertise in configuration ensures that no single component holds back the others. The result is a validated end-to-end solution that guarantees maximum system performance for your investment.

Application Areas: Where NVIDIA GPU Servers Make the Difference

NVIDIA AI servers are a driving force for innovation across many industries. At HAPPYWARE, we configure systems specifically optimized for the following specialized use cases:

- Artificial Intelligence (AI), Machine Learning & Deep Learning: Training AI models is the prime example of GPU acceleration. With specialized compute units such as NVIDIA Tensor Cores, our customers can train models for generative AI, speech recognition, or image recognition in a fraction of the time. This shortens development cycles, accelerates inference in production, and speeds up the market launch of AI-driven applications.

- High-Performance Computing (HPC) and Scientific Simulation: Research institutions, engineering firms, and industrial enterprises require enormous compute power for supercomputing workloads. Whether it’s computational fluid dynamics (CFD), molecular dynamics, or complex financial modeling, an NVIDIA server from HAPPYWARE delivers the performance needed for accurate, fast results that previously required entire data centers.

- Professional Visualization, 3D Rendering & Digital Twins: For creative professionals in architecture, media production, and product design, time is money. With NVIDIA RTX™ GPUs and their RT Cores for real-time ray tracing, you can make photorealistic changes to complex 3D models interactively. Final renderings that once took nights can now be completed in minutes or hours.

The following table provides an overview of recommended GPU configurations for specific application areas:

| Application Area | Recommended GPUs | Min. VRAM | Cluster Requirement | Example Applications |
|---|---|---|---|---|
| Large Language Models (LLM) | B200, H200, H100, A100 | 80 GB | 4–8 GPUs | GPT, BERT, T5, LLaMA |
| Computer Vision | RTX 6000 Ada, L40S, RTX 5090 | 24 GB | 1–4 GPUs | Object detection, image segmentation |
| Natural Language Processing (NLP) | A100, RTX 6000 Ada | 48 GB | 2–4 GPUs | Sentiment analysis, translation |
| AI Inference (Production) | L40S, A30, RTX 5090 | 16 GB | 1–2 GPUs | Real-time AI services, edge AI |
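
As a rough illustration of how the minimum-VRAM and GPU-count columns relate, the following sketch estimates the memory needed just to hold a model's weights and the number of GPUs that implies. The bytes-per-parameter values are standard for these number formats, but the 20% headroom factor, the 70-billion-parameter example, and the function names are assumptions for illustration. Activations, KV cache, and optimizer state add further memory on top.

```python
import math

# Storage cost per parameter for common numeric formats (bytes).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def gpus_needed(num_params: float, vram_gb: float, dtype: str = "fp16",
                headroom: float = 1.2) -> int:
    """Smallest GPU count whose combined VRAM holds the weights plus headroom.

    `headroom` is an assumed safety factor, not a vendor figure.
    """
    required = weight_memory_gb(num_params, dtype) * headroom
    return math.ceil(required / vram_gb)


if __name__ == "__main__":
    # Hypothetical 70-billion-parameter model on 80 GB GPUs.
    print(weight_memory_gb(70e9))          # 140.0
    print(gpus_needed(70e9, vram_gb=80))   # 3
```

A weights-only estimate like this is a lower bound; training typically needs considerably more memory per parameter, which is one reason LLM workloads are listed with multi-GPU clusters.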

The Path to the Perfect System: Key Factors in Configuration

Selecting the right NVIDIA server is a strategic decision. As an independent expert, HAPPYWARE provides objective, solution-oriented advice. The following aspects are critical to building a system that not only meets but exceeds your expectations:

1. Choosing the Right GPU:

NVIDIA offers a broad portfolio of GPUs, and the right choice depends entirely on your specific workload.

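NVIDIA Data Center GPUs (Blackwell, Hopper, and Ampere Architectures):

The top class for the most demanding AI and HPC workloads. The Hopper-based NVIDIA H100 and its successor, the NVIDIA H200 with its extremely high memory bandwidth, set the standard for state-of-the-art AI training and HPC applications. The Blackwell-based HGX B200 and B300 raise memory capacity and bandwidth further, while the Ampere-based A100 remains a proven option.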

| Model | VRAM | Memory Bandwidth | CUDA Cores | Tensor Cores (Gen) | TDP |
|---|---|---|---|---|---|
| HGX B300 SXM | 288 GB HBM3e | 8.2 TB/s | 37,888 | 1,184 (5th) | 1400 W |
| HGX B200 SXM | 180 GB HBM3e | 8.2 TB/s | 37,888 | 1,184 (5th) | 1000 W |
| H200 SXM | 141 GB HBM3e | 4.8 TB/s | 16,896 | 528 (4th) | 700 W |
| H100 SXM | 80 GB HBM3 | 3.35 TB/s | 16,896 | 528 (4th) | 700 W |
| A100 SXM | 80 GB HBM2e | 2.0 TB/s | 6,912 | 432 (3rd) | 400 W |
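
To illustrate why memory bandwidth matters when comparing these GPUs, here is a minimal sketch of a common back-of-the-envelope bound: when a workload is limited by memory traffic, one pass over its data cannot finish faster than the data size divided by the bandwidth. The bandwidth figures come from the table above; the 40 GB working set and the function name are hypothetical.

```python
# Peak memory bandwidth in TB/s, taken from the table above.
BANDWIDTH_TBPS = {"A100 SXM": 2.0, "H100 SXM": 3.35, "H200 SXM": 4.8}


def min_pass_time_ms(data_gb: float, gpu: str) -> float:
    """Lower bound on the time to stream `data_gb` gigabytes from VRAM once.

    Real kernels are slower; this ignores compute, caches, and latency.
    """
    bytes_total = data_gb * 1e9
    bytes_per_second = BANDWIDTH_TBPS[gpu] * 1e12
    return bytes_total / bytes_per_second * 1e3


if __name__ == "__main__":
    # Hypothetical 40 GB working set read once per step.
    for gpu in BANDWIDTH_TBPS:
        print(f"{gpu}: {min_pass_time_ms(40, gpu):.1f} ms")
```

For memory-bound workloads, the ratio of these lower bounds gives a first estimate of the speedup to expect from one GPU generation to the next.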

NVIDIA RTX™ GPUs & L-Series:

Deliver excellent price-performance for rendering, AI inference, and as an entry point into advanced AI projects.

The NVIDIA L40S is a versatile data center GPU that combines AI and graphics workloads.

The NVIDIA RTX™ 6000 Ada and the NVIDIA RTX PRO 6000 generation are well suited to professional visualization and are also a good choice for AI development, even though they connect via PCIe rather than an SXM socket.

| Model | VRAM | Memory Bandwidth | CUDA Cores | Tensor Cores (Gen) | TDP |
|---|---|---|---|---|---|
| L40S | 48 GB GDDR6 | 864 GB/s | 18,176 | 568 (4th) | 350 W |
| RTX 6000 Ada | 48 GB GDDR6 | 960 GB/s | 18,176 | 568 (4th) | 300 W |
| RTX PRO 6000 | 96 GB GDDR7 | 1.79 TB/s | 24,064 | 752 (5th) | 600 W |

2. Sufficient System Resources and Data Access:

The most powerful GPU can only reach its full potential within a well-balanced overall system. To avoid bottlenecks, all components must be optimally aligned. This includes a high-performance CPU with enough cores and high memory bandwidth, sufficient RAM per GPU to handle temporary data, and fast NVMe SSDs that ensure smooth data flow even with very large datasets.
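
One way to reason about this balance is to check whether storage can feed the GPUs fast enough. The sketch below compares the read throughput a training job would need with what the drives can deliver. All numbers (samples per second, sample size, per-drive throughput) are hypothetical placeholders to be replaced with measurements from your own workload, and the function names are illustrative.

```python
def required_read_gbps(samples_per_sec_per_gpu: float, sample_mb: float,
                       num_gpus: int) -> float:
    """Aggregate read throughput (GB/s) needed to keep all GPUs supplied."""
    return samples_per_sec_per_gpu * sample_mb * num_gpus / 1000


def storage_is_bottleneck(required_gbps: float, drive_gbps: float,
                          num_drives: int) -> bool:
    """True if the drives together cannot deliver the required throughput."""
    return drive_gbps * num_drives < required_gbps


if __name__ == "__main__":
    # Hypothetical job: 8 GPUs, each consuming 2,000 samples/s of 0.5 MB.
    need = required_read_gbps(2000, 0.5, 8)
    print(need)                                    # 8.0
    print(storage_is_bottleneck(need, 3.0, 2))     # True  (6 GB/s available)
    print(storage_is_bottleneck(need, 3.0, 4))     # False (12 GB/s available)
```

The same comparison can be repeated for CPU preprocessing and host memory; the slowest stage sets the pace for the whole system.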

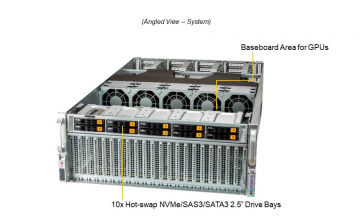

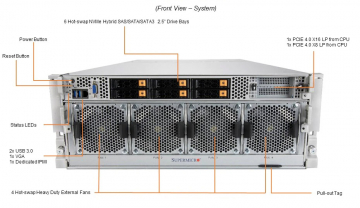

3. Optimized GPU Architecture and Connectivity:

How the GPUs are connected matters as much as which GPUs you choose. SXM modules on an HGX baseboard communicate with each other over NVIDIA NVLink, while PCIe cards such as the L40S or RTX 6000 Ada exchange data over the PCIe bus. As with system resources in general, the CPU, the RAM per GPU, and the NVMe storage must be sized so that neither the interconnect nor the host becomes the bottleneck for large-scale workloads.

4. Infrastructure and Stability:

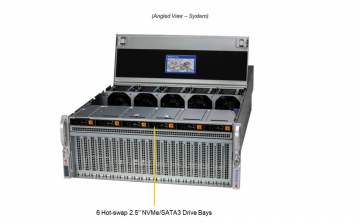

NVIDIA GPUs are high-performance components that require a robust physical infrastructure. Efficient cooling is essential to maintain thermal stability during 24/7 full-load operation. Equally critical is a redundant, high-capacity power supply to reliably meet the GPUs’ significant energy demands. This stability is achieved through specialized server systems offered by NVIDIA-certified partners such as Supermicro, Gigabyte, and Dell. At HAPPYWARE, we integrate these proven components into a certified complete system that delivers maximum efficiency and reliability.
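
To give a feel for the power planning described here, the following sketch estimates peak system draw from the GPU TDP values in the tables above and derives a power supply count with one spare unit (N+1). The host power of 800 W, the 20% margin, the 3,000 W supply rating, and the function names are assumptions for illustration, not HAPPYWARE sizing rules.

```python
import math

# Per-GPU TDP in watts, taken from the tables above.
GPU_TDP_W = {"H100 SXM": 700, "A100 SXM": 400, "L40S": 350, "RTX 6000 Ada": 300}


def estimate_system_power_w(gpu: str, num_gpus: int, host_w: float = 800,
                            margin: float = 0.2) -> float:
    """Estimated peak draw: GPUs plus host, with a safety margin on top.

    `host_w` (CPUs, RAM, drives, fans) and `margin` are assumed values.
    """
    return (GPU_TDP_W[gpu] * num_gpus + host_w) * (1 + margin)


def psus_for_n_plus_1(load_w: float, psu_w: float) -> int:
    """PSU count so the load is still covered if one supply fails (N+1)."""
    return math.ceil(load_w / psu_w) + 1


if __name__ == "__main__":
    load = estimate_system_power_w("H100 SXM", 8)
    print(f"{load:.0f} W")                         # 7680 W
    print(psus_for_n_plus_1(load, psu_w=3000))     # 4
```

The resulting figure also indicates how much heat the cooling system has to remove, since nearly all of the electrical power ends up as heat.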

Why a Custom-Configured NVIDIA GPU Server from HAPPYWARE is the Better Choice

The market offers many options. HAPPYWARE provides decisive added value through a partnership-based approach built on over 25 years of experience and certified quality (ISO 9001).

- Maximum Flexibility: Receive a fully tailored solution. Whether you prefer to purchase or lease a server, we select exactly the components (from Supermicro, Gigabyte, etc.) that best suit your specific use case.

- Independent, Honest Advice: Our loyalty is to you. We recommend the technology that fits your needs and integrate it into a complete, optimized solution for your GPU servers.

- Flexible Procurement Options: Purchase, lease, or rent – we provide the financing model that aligns with your corporate strategy.

- Comprehensive Service and Support: We offer up to 6 years of extended warranty and Europe-wide on-site service.

Your Future is GPU-Accelerated – Get Started Today!

Implementing an NVIDIA GPU server is a strategic investment in your company’s future readiness. It delivers supercomputing performance directly to your workspace. Accelerate not only your computations but your entire innovation pipeline. The expert team at HAPPYWARE is ready to guide you through the entire process.


Frequently Asked Questions (FAQ) about NVIDIA Servers

- What is the advantage of a custom-configured server compared to a standard system? The key advantage is precise tailoring to your specific workload. While standard systems often require compromises in component selection, a custom configuration ensures that every component—from CPU and RAM to storage—is perfectly aligned with the chosen GPU performance. This eliminates performance bottlenecks and avoids costs associated with overprovisioned hardware. You receive a fully validated, highly optimized system that maximizes the return on investment for your application.

- How much power does an NVIDIA GPU server consume? Power consumption depends heavily on the configuration and can range from approximately 750 watts to several kilowatts. We provide comprehensive guidance on energy efficiency and the requirements for your data center.

- Will I receive a ready-to-use system, or do I need to install the operating system and drivers myself? Our goal is to deliver a turnkey, ready-to-run system. Upon request, we pre-install and configure all common Linux distributions (e.g., Ubuntu, Rocky Linux) or Windows Server. Critical software stacks, including NVIDIA drivers and the CUDA toolkit, are also installed and configured. This allows your team to start working immediately after deployment without spending time on basic system setup.
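
After delivery, a quick way to confirm that the driver sees every GPU is to query `nvidia-smi`, the command-line tool installed with the NVIDIA driver. The sketch below is a minimal example of such a check and not a description of HAPPYWARE's own validation procedure; the function names are hypothetical. It returns an empty list when `nvidia-smi` is not installed or reports an error.

```python
import shutil
import subprocess

# Ask the driver for name, total memory, and driver version of each GPU.
QUERY = ["nvidia-smi",
         "--query-gpu=name,memory.total,driver_version",
         "--format=csv,noheader"]


def parse_gpu_csv(text: str) -> list[dict]:
    """Parse `nvidia-smi --format=csv,noheader` output into dictionaries."""
    gpus = []
    for line in text.strip().splitlines():
        name, memory, driver = [field.strip() for field in line.split(",")]
        gpus.append({"name": name, "memory": memory, "driver": driver})
    return gpus


def list_gpus() -> list[dict]:
    """Return the GPUs reported by the driver, or [] if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for gpu in list_gpus():
        print(gpu)
```

Comparing the number of entries returned with the number of GPUs ordered is a simple first acceptance check before starting real workloads.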