HPC Clusters - High-Performance Computing Clusters for High Demands

High-performance computing cluster systems, or HPC clusters for short, are typically found in technical and scientific environments such as universities, research institutions, and corporate research departments. The tasks performed there, such as weather forecasts or financial projections, are often broken down into smaller subtasks in the HPC cluster and then distributed to the cluster nodes.

The cluster's internal network connection is important because the cluster nodes exchange many small pieces of information, which have to travel from one cluster node to another as quickly as possible. The latency of the network must therefore be kept to a minimum. It is equally important that all cluster nodes of an HPC cluster can read the required data and write the generated data at the same time.

High Performance Computers

Configure and buy high-performance computers for HPC, e.g. for parallel computing with many cores per CPU!

High Performance Server or HPC Workstations for individual purposes for your own computer cluster.

High Performance Storage

Buy HPC storage for your especially important data, e.g. as All Flash Storage with NVMe SSDs.

Storage for stable and scalable file systems, e.g. Lustre, GlusterFS or Red Hat GFS. Our HPC engineers are happy to help you.

High Performance Networks

We offer high performance network equipment based on:

Buy High Performance Networking (HPN) Equipment online or let our HPC Engineers advise you!

Cluster Computing

Buy server clusters or cluster nodes for your high-performance cluster, consisting of server hardware and cluster software.

We deliver turnkey Cluster solutions including management software or complete Failover Cluster or just the Hardware for your Hadoop cluster or

GPU Computing

High performance GPU computing solutions

We offer GPU server systems, GPU workstations and GPU clusters with NVIDIA GPUs.

Artificial Intelligence

Our servers for Artificial Intelligence deliver maximum performance for complex applications and efficient data processing.

Rely on future technology - configure your AI server individually now or get advice!


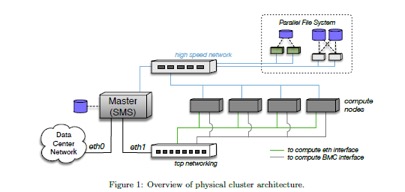

Structure of a High Performance Computing Cluster

- The cluster server, also called the master or frontend, manages access and provides the programmes and the home data area.

- The cluster nodes do the computing.

- A TCP network is used to exchange information in the HPC cluster.

- A high-performance network is required to enable data transmissions with very low latency.

- The High Performance Storage (parallel file system) allows all cluster nodes to write at the same time.

- The BMC Interface (IPMI Interface) is the access point for the administrator to manage the hardware.

All cluster nodes of an HPC cluster are normally equipped with the same processor type from the selected manufacturer; different manufacturers and types are usually not combined in one HPC cluster. Main memory and other resources, by contrast, may differ between nodes. Such a mixed configuration must be taken into account when configuring the job control software.
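To picture why the job control software needs to know about differing memory sizes: a job should only be placed on nodes that can actually hold it. The following is a simplified Python sketch of that idea, not Slurm or PBS Professional configuration. The node names, the memory sizes, and the `eligible_nodes` helper are assumptions invented for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str        # hypothetical host name
    memory_gb: int   # installed main memory in GB


def eligible_nodes(nodes, required_gb):
    """Return the nodes whose memory is large enough for the job."""
    return [n for n in nodes if n.memory_gb >= required_gb]


# Assumed cluster: same processor type everywhere, different RAM sizes.
cluster = [
    Node("node01", 64),
    Node("node02", 64),
    Node("node03", 256),
    Node("node04", 256),
]

small_job = eligible_nodes(cluster, required_gb=48)
large_job = eligible_nodes(cluster, required_gb=128)

print([n.name for n in small_job])
print([n.name for n in large_job])
```

A real scheduler tracks many more resources (cores, GPUs, licences), but the principle is the same: the resource description of each node has to match the hardware that is actually installed.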

Where should HPC be used?

HPC clusters are most effective if they are used for computations that can be subdivided into different subtasks. An HPC cluster can also handle a number of smaller tasks in parallel. A high-performance computing cluster is also able to make a single application available to several users at the same time in order to save costs and time by working simultaneously.
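The idea of subdividing a computation can be sketched in a few lines of Python. This is only an illustration on a single machine: a local thread pool stands in for the cluster nodes, whereas a real HPC cluster would typically use MPI or a job scheduler. The function names `split`, `subtask`, and `run` and the sum-of-squares workload are assumptions chosen for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Cut a list into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def subtask(chunk):
    """Work done independently on one chunk (here: a sum of squares)."""
    return sum(x * x for x in chunk)


def run(data, workers=4):
    # Each worker stands in for one cluster node.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_results = list(pool.map(subtask, split(data, workers)))
    # Combine the partial results into the final answer.
    return sum(partial_results)


total = run(list(range(1000)))
print(total)
```

The pattern works because the subtasks do not depend on each other; computations whose steps need constant exchange of intermediate results benefit far less from being split up.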

Depending on your budget and requirements, HAPPYWARE will be happy to build an HPC cluster for you, with variously configured cluster nodes, a high-speed network, and a parallel file system. For HPC cluster management, we rely on the well-known OpenHPC solution to ensure effective and intuitive cluster management.

If you would like to learn more about possible application scenarios for HPC clusters, our IT and HPC Cluster expert Jürgen Kabelitz will be happy to help you. He is the head of our cluster department and is available to answer your questions on +49 4181 23577 79.

HPC Cluster Solutions from HAPPYWARE

Below we have compiled a number of possible HPC cluster configurations for you:

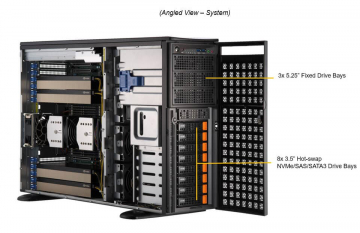

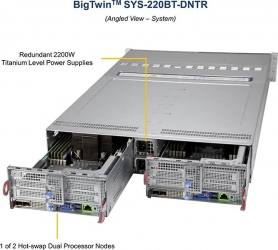

Frontend or Master Server

- 4U cluster server with 24 3.5'' drive bays

- SSD for the operating system

- Dual Port 10 Gb/s network adaptor

- FDR Infiniband adaptor

Cluster nodes

- 12 cluster nodes with dual CPU and 64 GB RAM

- 12 cluster nodes with dual CPU and 256 GB RAM

- 6 GPU computing systems, each with 4 Tesla V100 SXM2 and 512 GB RAM

- FDR Infiniband and 10 Gb/s TCP network

High-performance storage

- 1 storage system with 32 x NF1 SSD with 16 TB capacity each

- 2 storage systems with 45 hot-swap bays each

- Network connection: 10 Gb/s TCP/IP and FDR Infiniband
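As a rough tally of what this example configuration adds up to, the following Python sketch sums the listed figures. The numbers are copied from the lists above; the variable names are chosen for the example, and the storage figure is raw capacity only, since the usable capacity depends on the redundancy scheme and file system, which are not specified here.

```python
# Figures copied from the example configuration above.
node_groups = [
    # (description, number of systems, RAM per system in GB, GPUs per system)
    ("dual-CPU node, 64 GB", 12, 64, 0),
    ("dual-CPU node, 256 GB", 12, 256, 0),
    ("GPU system, 4x Tesla V100", 6, 512, 4),
]

total_systems = sum(count for _, count, _, _ in node_groups)
total_ram_gb = sum(count * ram for _, count, ram, _ in node_groups)
total_gpus = sum(count * gpus for _, count, _, gpus in node_groups)

# Raw capacity of the NF1 SSD storage system: 32 drives of 16 TB each.
nf1_raw_tb = 32 * 16

print(f"compute systems: {total_systems}")
print(f"total RAM: {total_ram_gb} GB")
print(f"GPUs: {total_gpus}")
print(f"NF1 raw capacity: {nf1_raw_tb} TB")
```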

HPC Cluster Management - with OpenHPC and xCAT

Managing HPC clusters and their data requires powerful software. To meet this need, we offer two proven solutions: OpenHPC and xCAT.

HPC Cluster with OpenHPC

OpenHPC enables basic HPC cluster management based on Linux and open-source software.

Scope of services

- Forwarding of system logs possible

- Nagios Monitoring & Ganglia Monitoring - Open source solution for infrastructures and scalable system monitoring for HPC clusters and grids

- ClusterShell event-based Python library for parallel execution of commands on the cluster

- Genders - static cluster configuration database

- ConMan - Serial Console Management

- NHC - node health check

- Developer software including EasyBuild, hwloc, Spack, and Valgrind

- Compilers such as GNU Compiler, LLVM Compiler

- MPI Stacks

- Job control system such as PBS Professional or Slurm

- Infiniband support & Omni-Path support for x86_64 architectures

- BeeGFS support for mounting BeeGFS file systems

- Lustre Client support for mounting Lustre file systems

- GEOPM Global Extensible Power Manager

- Support of Intel Parallel Studio XE software

- Support of local software with the Modules software

- Support of nodes with stateful or stateless configuration
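Cluster tools such as ClusterShell address groups of nodes rather than single hosts, and such groups are commonly written in a compact bracket notation. The sketch below shows how that kind of notation can be expanded into individual host names. It uses only the Python standard library and is not the ClusterShell API; the `expand_nodes` helper, the host names, and the simplified syntax it accepts (one bracket group with comma-separated ranges) are assumptions made for illustration.

```python
import re

# Matches a prefix, one bracketed range list, and an optional suffix,
# e.g. "node[01-03,07]" or "gpu[1-6]-ib".
_PATTERN = re.compile(r"^([^\[\]]*)\[([^\[\]]+)\]([^\[\]]*)$")


def expand_nodes(spec):
    """Expand 'node[01-03,07]' into ['node01', 'node02', 'node03', 'node07']."""
    match = _PATTERN.match(spec)
    if match is None:
        return [spec]  # plain host name without a range
    prefix, ranges, suffix = match.groups()
    names = []
    for part in ranges.split(","):
        low, _, high = part.partition("-")
        high = high or low          # single index such as "07"
        width = len(low)            # keep zero padding, e.g. "01"
        for index in range(int(low), int(high) + 1):
            names.append(f"{prefix}{index:0{width}d}{suffix}")
    return names


print(expand_nodes("node[01-03,07]"))
```

With a list of host names in hand, a management tool can then run the same command on every node in parallel and collect the results.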

Supported operating systems

- CentOS 7.5

- SUSE Linux Enterprise Server 12 SP3

Supported hardware architectures

- x86_64

- aarch64

HPC Cluster with xCAT

xCAT, the "Extreme Cloud Administration Toolkit", enables comprehensive HPC cluster management.

Suitable for the following applications

- Clouds

- Clusters

- High-performance clusters

- Grids

- Data centres

- Render farms

- Online Gaming Infrastructure

- Or any other system configuration that is possible.

Scope of services

- Detection of servers in the network

- Running remote system management

- Provisioning of operating systems on physical or virtual servers

- Diskful (stateful) or diskless (stateless) installation

- Installation and configuration of user software

- Parallel system management

- Cloud integration

Supported operating systems

- RHEL

- SLES

- Ubuntu

- Debian

- CentOS

- Fedora

- Scientific Linux

- Oracle Linux

- Windows

- ESXi

- and many others

Supported hardware architectures

- IBM Power

- IBM Power LE

- x86_64

Supported virtualisation infrastructure

- IBM PowerKVM

- IBM zVM

- ESXi

- Xen

Performance values for potential processors

The performance values used were those of SPEC.ORG. Only the values for SPECrate 2017 Integer and SPECrate 2017 Floating Point are compared:

| Manufacturer | Model | Processor | Clock rate | # CPUs | # Cores | # Threads | Base Integer | Peak Integer | Base Floating Point | Peak Floating Point |
|---|---|---|---|---|---|---|---|---|---|---|
| Gigabyte | R181-791 | AMD EPYC 7601 | 2.2 GHz | 2 | 64 | 128 | 281 | 309 | 265 | 275 |
| Supermicro | 6029U-TR4 | Xeon Silver 4110 | 2.1 GHz | 2 | 16 | 32 | 74.1 | 78.8 | 87.2 | 84.8 |
| Supermicro | 6029U-TR4 | Xeon Gold 5120 | 2.2 GHz | 2 | 28 | 56 | 146 | 137 | 143 | 140 |
| Supermicro | 6029U-TR4 | Xeon Gold 6140 | 2.3 GHz | 2 | 36 | 72 | 203 | 192 | 186 | 183 |
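Because the systems in the table have very different core counts, it can help to normalise the base values per core. The Python sketch below does this with the figures from the table. Dividing by the core count is a rough comparison aid assumed here for illustration, not an official SPEC metric, since SPECrate measures the throughput of the whole system.

```python
# (processor, total cores, SPECrate 2017 Integer base, Floating Point base)
# Values copied from the table above (two-socket systems).
systems = [
    ("AMD EPYC 7601", 64, 281.0, 265.0),
    ("Xeon Silver 4110", 16, 74.1, 87.2),
    ("Xeon Gold 5120", 28, 146.0, 143.0),
    ("Xeon Gold 6140", 36, 203.0, 186.0),
]

# Base score divided by the number of cores in the system.
per_core = {
    name: (int_base / cores, fp_base / cores)
    for name, cores, int_base, fp_base in systems
}

for name, (int_pc, fp_pc) in per_core.items():
    print(f"{name:18s} int/core {int_pc:5.2f}  fp/core {fp_pc:5.2f}")
```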

NVIDIA Tesla V100 SXM2: 7.8 TFLOPS (64-bit), 15.7 TFLOPS (32-bit), and 125 TFLOPS for tensor operations.
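Applied to the example configuration with 6 GPU systems of 4 Tesla V100 SXM2 each, these per-GPU figures can be multiplied out as follows. The result is a theoretical aggregate peak under the assumption of perfect scaling; real applications will reach less. The variable names are chosen for this sketch.

```python
# Per-GPU peak values for the Tesla V100 SXM2 as quoted above, in TFLOPS.
v100_peak_tflops = {
    "64-bit": 7.8,
    "32-bit": 15.7,
    "tensor": 125.0,
}

gpu_systems = 6       # GPU computing systems in the example configuration
gpus_per_system = 4   # Tesla V100 SXM2 per system
gpu_count = gpu_systems * gpus_per_system

# Theoretical aggregate peak, assuming perfect scaling across all GPUs.
aggregate_tflops = {
    precision: peak * gpu_count
    for precision, peak in v100_peak_tflops.items()
}

for precision, value in aggregate_tflops.items():
    print(f"{precision}: {value:.1f} TFLOPS")
```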

HPC Clusters and more from HAPPYWARE - Your answer for powerful cluster solutions

HAPPYWARE is your specialist for individually configured, high-performance cluster solutions, whether it's GPU clusters, HPC clusters, or other setups. We would be happy to build a system that meets your company's needs at a competitive price.

We are able to offer special discounts for scientific and educational organisations. If you would like to know more about our discounts, please contact us.

If you would like to know more about the equipment of our HPC clusters or you need an individually designed cluster solution, please contact our cluster specialist Jürgen Kabelitz on +49 4181 23577 79. We will be happy to help.