NVIDIA HGX: The Ultimate Reference Platform for AI and HPC

The world of artificial intelligence (AI) is being transformed by large language models (LLMs) and generative AI. These advanced applications demand an almost unimaginable level of computing power.

NVIDIA developed the HGX platform for precisely this challenge. NVIDIA HGX is a standardized server platform for AI supercomputing in the data center. It combines high-performance GPUs with NVLink and NVSwitch technology and serves as a reference architecture for scalable AI and HPC workloads.

As your long-standing partner for customized server and high-performance computing (HPC) solutions, we turn the complexity of this technology into a clear competitive advantage. We show you how to get the most out of HGX for your objectives.

Take the next step into your AI future. Speak with our AI and HPC specialists today to configure your tailored NVIDIA HGX solution.


What is NVIDIA HGX? More Than the Sum of Its Parts

The NVIDIA HGX platform is the technological answer to a central challenge in modern multi-GPU systems: the bandwidth limitation of the PCIe bus.

In conventional server architectures, the PCIe bus (Peripheral Component Interconnect Express) serves as the primary interface for high-performance components. However, in compute-intensive AI and HPC applications that rely on parallel processing with multiple GPUs, the shared bandwidth of this bus becomes the limiting factor. Extensive data transfers between GPUs (peer-to-peer communication) lead to high latency and bus saturation. As a result, the powerful compute units of the GPUs cannot be fully utilized, as they are forced to wait for data—reducing overall system efficiency.

This is precisely where the NVIDIA HGX platform comes in. It is not a finished product but rather a standardized reference architecture that forms the foundation of the most powerful NVIDIA GPU servers for AI and HPC.

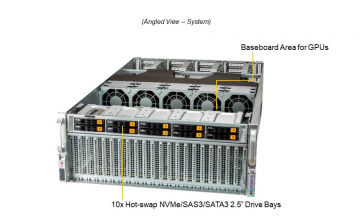

Technically speaking, NVIDIA HGX is a highly integrated server platform (a so-called baseboard or reference design) that interconnects multiple NVIDIA Tensor Core GPUs (typically 4 or 8) via extremely fast links.

The goal of this architecture is to let the GPUs act as a single, massive super-GPU. This maximizes GPU-to-GPU data throughput and accelerates the training of the most complex AI models as well as demanding HPC simulations.

HGX at a Glance:

- Problem: The PCIe bus slows down communication between multiple GPUs.

- Solution: HGX creates a direct, ultra-fast connection between all GPUs, allowing them to operate as one.

- Result: Maximum overall performance for AI and HPC.

The Key Technologies for Maximum NVIDIA HGX Performance

The outstanding performance of an HGX system rests on the interplay of three NVIDIA technologies: the GPUs themselves and two interconnect technologies designed to eliminate communication bottlenecks.

- NVIDIA Tensor Core GPUs (e.g., H100, H200): The NVIDIA H100 Tensor Core GPU, based on the Hopper architecture, is the established standard for AI training. The newer NVIDIA H200 Tensor Core GPU offers considerably more and faster memory (141 GB of HBM3e versus 80 GB of HBM3 on the H100), making it ideal for the largest LLMs and HPC datasets.

- NVIDIA NVLink™: This is a proprietary high-speed interconnect that establishes direct point-to-point connections between GPUs. Unlike the PCIe bus, whose shared bandwidth becomes a bottleneck for parallel workloads, NVLink provides dedicated links with significantly higher data transfer rates. These dedicated connections are crucial for minimizing latency and maximizing throughput in GPU-to-GPU communication.

- NVIDIA NVSwitch™: Acting as a high-speed switch for NVLink traffic, NVSwitch ensures that every GPU on the platform can communicate with every other GPU simultaneously at full bandwidth (all-to-all communication). On an NVIDIA HGX H100 8-GPU platform, each GPU has 900 GB/s of NVLink bandwidth and the platform reaches a bisection bandwidth of 3.6 TB/s, many times what is possible with PCIe.
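To put the bandwidth gap into numbers, the following Python sketch estimates how long one ring all-reduce (a common algorithm for synchronizing gradients in data-parallel training) would take across eight GPUs. It is a simplified, bandwidth-only model under stated assumptions: the per-direction link speeds (450 GB/s for H100 NVLink, roughly 64 GB/s for PCIe Gen5 x16) are nominal figures, the 7-billion-parameter FP16 gradient buffer is a hypothetical workload, and latency, protocol overhead and contention are ignored.

```python
def ring_allreduce_seconds(buffer_bytes: float, num_gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth-only time estimate for one ring all-reduce.

    In a ring all-reduce, each GPU sends 2 * (N - 1) / N * S bytes:
    N - 1 reduce-scatter steps and N - 1 all-gather steps of S / N bytes each.
    Latency, protocol overhead and link contention are ignored.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single GPU
    bytes_sent_per_gpu = 2 * (num_gpus - 1) / num_gpus * buffer_bytes
    return bytes_sent_per_gpu / link_bytes_per_s


GB = 1e9
# Assumed nominal per-direction bandwidths, not measured values:
NVLINK_H100_PER_DIR = 450 * GB   # H100 SXM: 900 GB/s total (bidirectional) NVLink
PCIE_GEN5_X16_PER_DIR = 64 * GB  # PCIe Gen5 x16: roughly 64 GB/s per direction

# Hypothetical workload: FP16 gradients (2 bytes each) of a 7-billion-parameter model
GRAD_BYTES = 7e9 * 2

if __name__ == "__main__":
    for name, bandwidth in [("NVLink/NVSwitch", NVLINK_H100_PER_DIR),
                            ("PCIe Gen5 x16", PCIE_GEN5_X16_PER_DIR)]:
        t = ring_allreduce_seconds(GRAD_BYTES, num_gpus=8, link_bytes_per_s=bandwidth)
        print(f"{name:16s}: {t * 1000:7.1f} ms per all-reduce")
```

Under these assumptions the estimate is about 54 ms per all-reduce over NVLink versus about 383 ms over PCIe. The PCIe figure is still optimistic: it assumes every GPU has its own uncontended x16 link, whereas in real PCIe servers GPU-to-GPU traffic often shares switch uplinks or has to cross the CPU.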

This trio transforms a collection of individual GPUs into a coherent, highly efficient supercomputer ready for the most demanding tasks.

The Key Difference: NVIDIA HGX vs. DGX

One of the most common questions we are asked in consultations concerns the difference between HGX and DGX. This distinction is central to your purchasing decision.

- NVIDIA HGX is the standardized reference architecture. As a system integrator, HAPPYWARE uses this architecture as the foundation to build your customized, NVIDIA-certified system from components by leading manufacturers such as Supermicro, Gigabyte, Dell, and others. HGX provides maximum flexibility in configuring CPU (including AMD EPYC), memory, storage, and networking components.

- NVIDIA DGX is NVIDIA’s turnkey product. It is a fully integrated hardware and software system delivered directly by NVIDIA. A DGX system is based on the HGX platform but is a closed, NVIDIA-optimized “appliance” with a fully accelerated software stack (NVIDIA AI Enterprise) and direct NVIDIA support.

| Feature | NVIDIA HGX Server from HAPPYWARE | NVIDIA DGX System |
|---|---|---|
| Concept | Flexible platform / reference design | Turnkey complete system |
| Flexibility | High: CPU, RAM, storage, networking freely configurable | Low: Fixed, NVIDIA-optimized configuration |
| Customization | Tailored to specific workloads and budgets | Standardized for maximum out-of-the-box performance |
| Manufacturer | Various OEMs (Supermicro, Gigabyte, etc.) | Exclusively NVIDIA |
| Support | Through the system integrator (e.g., HAPPYWARE) and OEM | Directly through NVIDIA Enterprise Support |

NVIDIA DGX Alternatives: Tailored Performance for Your Budget

In addition to NVIDIA’s turnkey DGX systems, HAPPYWARE offers a wide portfolio of alternative AI server solutions tailored to different budgets and performance needs. These alternatives deliver performance on par with NVIDIA DGX systems, often at 30–50% lower cost, helping to meet the strict budget requirements of AI projects.

With customized configuration and support services for each brand, we help you select the exact AI server solution that fits your use case.

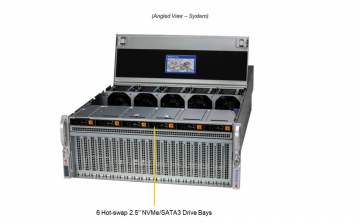

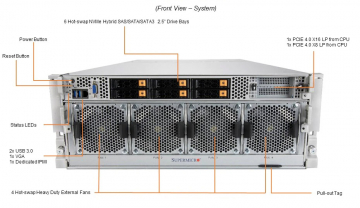

- Supermicro AI Servers: Models such as the Supermicro SYS-420GP-TNAR and SYS-740GP-TNRT support up to eight NVIDIA H100 or A100 HGX GPUs and represent a powerful alternative to the DGX A100. These systems provide excellent value for money, particularly for large AI training projects.

- Gigabyte AI Servers: Models like the G292-Z40, G482-Z50, and G292-Z20 deliver high-performance solutions for medium to large AI projects, supporting between 4 and 10 GPUs depending on the configuration. Gigabyte systems are particularly well-suited for research institutions and universities thanks to their flexibility.

- Dell AI Servers: Models such as the PowerEdge XE8545, PowerEdge R750xa, and PowerEdge C6525 target entry- to mid-level AI projects and support two to four GPUs. Dell systems are considered extremely reliable AI server alternatives, particularly in established enterprise environments.

The Evolution of Performance: From A100 to H100/H200 to Blackwell

The HGX platform evolves with each GPU generation to meet the exponentially growing demands of AI.

- HGX A100: The established gold standard of the Ampere generation, enabling countless AI breakthroughs.

- HGX H100: A quantum leap with the Hopper architecture. Its "Transformer Engine", combined with FP8 precision, delivers dramatically faster training of large language models along with unmatched performance and efficiency.

- HGX H200: A variant of the H100, focused on maximum memory capacity. With up to 141 GB of HBM3e memory per GPU, it massively accelerates inference for enormous models.

- Coming soon: HGX B200 and B300 (NVIDIA Blackwell): With the HGX B200, the next-generation NVIDIA Blackwell architecture promises even greater performance, higher density, and a new generation of NVLink to further advance scaling for AI factories and exascale computing.
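The practical value of the larger H200 memory becomes clear with a rough sizing calculation. The Python sketch below estimates how many GPUs are needed just to hold a model's weights for inference. It is a rule of thumb under stated assumptions: weights take 2 bytes per parameter in FP16 and 1 byte in FP8, an assumed 20% of each GPU's memory is held back for KV cache, activations and runtime overhead, and the 405-billion-parameter model is a hypothetical example.

```python
import math

# Per-GPU memory in GB as specified for the SXM variants.
GPU_MEMORY_GB = {"H100": 80, "H200": 141}
# Bytes needed to store one parameter at a given precision.
BYTES_PER_PARAM = {"FP16": 2, "FP8": 1}


def min_gpus_for_weights(params_billion: float, gpu: str, precision: str,
                         usable_fraction: float = 0.8) -> int:
    """Smallest number of GPUs whose usable memory can hold the model weights.

    usable_fraction is an assumed share of GPU memory available for weights;
    the rest is reserved for KV cache, activations and framework overhead.
    """
    weights_gb = params_billion * BYTES_PER_PARAM[precision]  # 1e9 params x bytes = GB
    usable_gb_per_gpu = GPU_MEMORY_GB[gpu] * usable_fraction
    return math.ceil(weights_gb / usable_gb_per_gpu)


if __name__ == "__main__":
    for gpu in ("H100", "H200"):
        for precision in ("FP16", "FP8"):
            n = min_gpus_for_weights(405, gpu, precision)
            print(f"405B params, {precision} on {gpu}: at least {n} GPUs")
```

With these assumptions, the FP16 weights of such a model need more GPUs than a single HGX H100 8-GPU node provides, while they fit within one HGX H200 8-GPU node.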

At HAPPYWARE, we ensure you always have access to the latest NVIDIA technologies, enabling future-proof investments.

Use Cases and Benefits: Who Should Invest in NVIDIA HGX Servers?

Investing in an HGX-based system is a strategic decision for companies and research institutions at the forefront of technological development.

Top Benefits at a Glance:

- Maximum Performance: Eliminate GPU communication bottlenecks and reduce training times from months to days.

- Linear Scalability: Start with an 8-GPU system and scale up to massive clusters by combining multiple systems with ultra-fast networking (e.g., NVIDIA Quantum InfiniBand).

- Greater Efficiency: A single, optimized HGX system can replace many smaller servers, reducing total cost of ownership (TCO) in terms of space, power, and management in the datacenter.

- Future-Proofing: Invest in a proven industry standard supported by a vast software ecosystem (e.g., NVIDIA AI Enterprise, NVIDIA NGC).

Typical Users and Workloads:

- Most demanding AI workloads: Training, fine-tuning, and inference of LLMs, image, and video models (generative AI).

- High-Performance Computing (HPC): Complex simulations in science, molecular dynamics, weather forecasting, and finance.

- Research & Development: Researchers and scientists in universities and private labs who need dedicated compute resources for their datasets.

- Industry 4.0 & Automotive: Development of autonomous driving systems, digital twins, and complex optimization models.

Our expert recommendation for NVIDIA HGX Clusters:

- A single NVIDIA HGX server operates as a logical “super-GPU” thanks to NVLink™ and NVSwitch™, providing aggregated GPU memory capacity and massively increased interconnect bandwidth. However, for exascale AI/ML/DL workloads—such as Large Language Models (LLMs) with hundreds of billions of parameters—a single node is insufficient.

- In these cases, multiple HGX cluster nodes are deployed in rack or multi-rack architectures. When interconnected via ultra-high-speed fabrics such as NVIDIA Quantum-2 InfiniBand (400 Gb/s) or Spectrum-X800 RoCE Ethernet (800 Gb/s), they deliver linearly scalable GPU clusters capable of extreme distributed parallelism with minimal latency. This HGX cluster architecture thus forms the foundation for a future-proof, scalable supercomputing infrastructure for AI and HPC.

- For optimal efficiency, cooling, and cost savings, we recommend deploying NVIDIA HGX clusters in liquid-cooled data centers, ideally with Direct Liquid Cooling (DLC). This is especially critical for high-density systems powered by NVIDIA H100, as well as for next-generation H200, B200, and B300 GPU servers, where thermal management and energy efficiency translate directly into sustained performance and lower TCO.
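Why a single node is insufficient for models with hundreds of billions of parameters can be illustrated with a widely used rule of thumb for mixed-precision training with the Adam optimizer: about 16 bytes of model state per parameter. The Python sketch below assumes these states are fully sharded across all GPUs in the cluster. It does not model activation memory explicitly but keeps an assumed 30% of GPU memory in reserve for it; the 175-billion-parameter model is a hypothetical example.

```python
import math

# Rule-of-thumb model state per parameter for mixed-precision Adam training:
# FP16 weights (2) + FP16 gradients (2) + FP32 master weights (4)
# + FP32 Adam first moment (4) + FP32 Adam second moment (4) = 16 bytes.
BYTES_PER_PARAM_TRAINING = 2 + 2 + 4 + 4 + 4


def min_hgx_nodes_for_training(params_billion: float, gpu_memory_gb: float = 80,
                               gpus_per_node: int = 8,
                               usable_fraction: float = 0.7) -> int:
    """Minimum number of HGX nodes whose combined GPU memory holds all model states.

    Assumes model states are fully sharded across every GPU in the cluster.
    Activations are not modeled; usable_fraction keeps the remaining memory
    in reserve for them and for communication buffers.
    """
    model_state_gb = params_billion * BYTES_PER_PARAM_TRAINING
    usable_gb_per_node = gpu_memory_gb * gpus_per_node * usable_fraction
    return math.ceil(model_state_gb / usable_gb_per_node)


if __name__ == "__main__":
    for label, mem_gb in (("HGX H100 (80 GB)", 80), ("HGX H200 (141 GB)", 141)):
        nodes = min_hgx_nodes_for_training(175, gpu_memory_gb=mem_gb)
        print(f"175B params on {label}: at least {nodes} nodes")
```

Even under these optimistic assumptions, the model state alone (about 2.8 TB) far exceeds the 640 GB of GPU memory in a single HGX H100 8-GPU node, so the work has to be distributed across several nodes connected by a high-speed fabric.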

Practical Check: What to Consider Before Deploying an NVIDIA HGX Server

A system combining the full power of eight NVIDIA H100 GPUs places significant demands on your datacenter infrastructure. At HAPPYWARE, our experts provide in-depth guidance on these critical points:

- Power: A fully equipped HGX H100 8-GPU system can draw 10 kW or more. This requires careful planning of power distribution (PDUs) and rack-level circuit protection.

- Cooling: The extreme power density generates substantial heat. While powerful air-cooled solutions exist, liquid cooling, in particular Direct Liquid Cooling (DLC), is increasingly becoming the standard for HGX systems to maintain peak performance and maximize density in the datacenter.

- Space and Weight: HGX servers are typically large (often 4U or more) and heavy, which must be taken into account when planning racks.
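For a first rack-planning estimate, the Python sketch below combines the power and space constraints listed above and converts the resulting electrical load into heat output (1 kW corresponds to roughly 3,412 BTU/h, and practically all power drawn by IT equipment ends up as heat). The rack power budgets, the 80% derating of the budget, the 8U server height and the 4U kept free for switches and PDUs are illustrative assumptions, not recommendations for a specific site.

```python
import math

KW_TO_BTU_PER_HOUR = 3412.14  # 1 kW of electrical load is roughly 3412 BTU/h of heat


def max_systems_per_rack(rack_budget_kw: float, system_kw: float,
                         rack_units: int = 42, system_units: int = 8,
                         reserved_units: int = 4, derating: float = 0.8) -> int:
    """Number of HGX systems a rack can host, limited by power and by space.

    derating is an assumed safety margin on the rack power budget;
    reserved_units is space kept free for switches, PDUs and cable management.
    """
    by_power = math.floor(rack_budget_kw * derating / system_kw)
    by_space = (rack_units - reserved_units) // system_units
    return max(0, min(by_power, by_space))


def rack_heat_btu_per_hour(num_systems: int, system_kw: float) -> float:
    """Heat to be removed, assuming all electrical power is dissipated as heat."""
    return num_systems * system_kw * KW_TO_BTU_PER_HOUR


if __name__ == "__main__":
    system_kw = 10.0  # per the text: a fully equipped HGX H100 8-GPU system draws 10 kW or more
    for rack_budget_kw in (20, 40, 100):
        n = max_systems_per_rack(rack_budget_kw, system_kw)
        heat = rack_heat_btu_per_hour(n, system_kw)
        print(f"{rack_budget_kw:>3} kW rack: {n} systems, ~{heat:,.0f} BTU/h of heat")
```

In the 100 kW case, which in practice usually requires liquid cooling, rack space rather than power becomes the limiting factor under these assumptions.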

Your Individual NVIDIA HGX Solution – Configured by HAPPYWARE

The NVIDIA HGX platform provides the technological foundation. At HAPPYWARE, we turn this foundation into a tailored, production-ready, and reliable solution built precisely for your needs.

Since 1999, we have been supporting companies across Europe with customized server and storage systems. Benefit from our ISO 9001-certified quality and deep technical expertise.

Our Service for You:

- Expert Consulting: We analyze your workload and recommend the optimal configuration—from CPU and memory to networking.

- Custom Manufacturing: Every GPU server with NVIDIA HGX is built in-house according to your specifications and undergoes rigorous testing.

- Infrastructure Planning: We support you in planning the necessary power and cooling capacities.

- Comprehensive Support: With Europe-wide on-site service and up to 6 years of extended warranty, we secure your investment for the long term.

Take the next step into your AI future. Talk to our HPC and AI specialists today to configure your individual NVIDIA HGX solution.

Frequently Asked Questions (FAQ) about NVIDIA HGX

What is the main advantage of NVLink and NVSwitch compared to PCIe?

The key advantage is bandwidth and direct communication. NVLink provides a dedicated, extremely fast connection between GPUs. NVSwitch enables all GPUs to communicate simultaneously, avoiding the “traffic jam” that occurs with shared PCIe lanes. The result is accelerated application performance.

Does HAPPYWARE also offer HGX systems with the new NVIDIA H200 GPUs?

Yes. We work closely with our partners to always offer you the most advanced technologies. Systems based on the NVIDIA HGX H200 platform are ideal for customers with workloads requiring maximum memory capacity and bandwidth per GPU. Contact our sales team for current availability and configurations.

Can I get an HGX system with AMD EPYC™ CPUs?

Yes, this is one of the major benefits of the flexible HGX platform. We can configure your system with either the latest Intel® Xeon® processors or high-performance AMD EPYC™ CPUs, depending on which architecture best suits your specific use case.

Is an HGX system better for deep learning than a standard GPU server?

For training large deep learning models, particularly large language models, an HGX system is significantly superior. The high interconnect bandwidth is critical when the model and datasets need to be distributed across multiple GPUs—which is the case with nearly all modern, complex models.